#Assignment-1 Create the log of the return data (way 1: log((s_t - s_{t-1}) / s_{t-1}))

> #Calculate the historical volatility.

> #Create an ACF plot for the log(returns) data, run the ADF test, and interpret both. NSE Nifty index (from Jan 2012 to 31 Jan 2013)
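The transformation named in the heading can be written out as the program below computes it. Taking the logarithm of the simple return directly would fail on any day with a negative return, so the program first standardizes the returns and shifts them by 10 (a choice made in the code, not a standard definition of log return):

$$
R_t = \frac{S_t - S_{t-1}}{S_{t-1}}, \qquad
M_t = \frac{R_t - \bar{R}}{s_R} + 10, \qquad
\ell_t = \log M_t
$$

Here $S_t$ is the closing price, $\bar{R}$ and $s_R$ are the sample mean and standard deviation of the returns (what `scale()` uses by default), and $\ell_t$ is the series stored in `logreturn`. The logarithm is defined as long as every standardized return stays above $-10$.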

Program:

> z<-read.csv(file.choose(),header=T)

> closingprice<-z$Close

> closingprice.ts<-ts(closingprice,frequency=252)

> laggingtable<-cbind(closingprice.ts,lag(closingprice.ts,k=-1),closingprice.ts-lag(closingprice.ts,k=-1))

> Return<-(closingprice.ts-lag(closingprice.ts,k=-1))/lag(closingprice.ts,k=-1)

> Manipulate<-scale(Return)+10

> logreturn<-log(Manipulate)

> acf(logreturn)

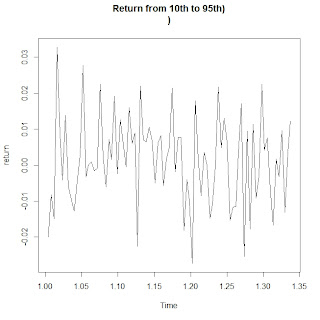

The figure shows that the sample autocorrelations at all nonzero lags lie within the 95% confidence bands, so there is no significant serial correlation in the series, which is consistent with a stationary time series.
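The visual check on the ACF plot can also be done numerically. The following is a minimal sketch using simulated white noise in place of the real series (the vector `x` and its length are assumptions for illustration); it compares each sample autocorrelation with the approximate 95% band that the default ACF plot draws.

```r
# Illustrative only: simulated white noise stands in for the log-return series.
set.seed(1)
x <- rnorm(270)                          # hypothetical sample, roughly a year of trading days

a    <- acf(x, plot = FALSE)             # sample autocorrelations, lag 0 included
band <- qnorm(0.975) / sqrt(length(x))   # approximate 95% band, +/- 1.96 / sqrt(n)

rho     <- a$acf[-1]                     # drop lag 0, which is always 1
outside <- which(abs(rho) > band)        # lags whose autocorrelation leaves the band
length(outside)                          # number of lags outside the band
```

Even for pure white noise, about 1 lag in 20 is expected to fall outside the band by chance, so an occasional exceedance on its own is weak evidence of serial correlation.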

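> #sqrt(252) annualizes a daily standard deviation; a name other than T avoids masking the TRUE shorthand

> annualizer<-252^.5

> Historicalvolatility<-sd(Return)*annualizer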

> Historicalvolatility

[1] 0.1475815
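The annualization step can be illustrated on a few made-up prices. This is a minimal sketch: the `price` vector is hypothetical, and it uses `diff()` on a plain vector rather than the `lag()` arithmetic on a `ts` object used in the program.

```r
# Hypothetical closing prices; not the Nifty data used above.
price <- c(100, 101, 99.5, 100.2, 102, 101.3)

simple_ret <- diff(price) / head(price, -1)   # (S_t - S_{t-1}) / S_{t-1}
daily_sd   <- sd(simple_ret)                  # sample standard deviation of daily returns
annual_vol <- daily_sd * sqrt(252)            # square-root-of-time scaling, 252 trading days
annual_vol
```

The scaling assumes daily returns are uncorrelated with constant variance, so that the variance over 252 days is 252 times the daily variance.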

> library(tseries)

> adf.test(logreturn)

Augmented Dickey-Fuller Test

data: logreturn

Dickey-Fuller = -5.656, Lag order = 6, p-value = 0.01

alternative hypothesis: stationary

Warning message:

In adf.test(logreturn) : p-value smaller than printed p-value

Since the p-value is less than 0.05 (that is, 1 - 0.95), we reject the null hypothesis of a unit root at the 5% significance level and conclude that the time series is stationary, so further analysis of the data can be carried out.
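For reference, the regression behind the test can be sketched as follows, assuming `adf.test` here is the one from the `tseries` package, whose documentation describes a regression with a constant and a linear trend:

$$
\Delta y_t = \alpha + \beta t + \gamma\, y_{t-1} + \sum_{i=1}^{k} \delta_i\, \Delta y_{t-i} + \varepsilon_t,
\qquad H_0:\ \gamma = 0 \quad \text{vs.} \quad H_1:\ \gamma < 0
$$

Here $y_t$ is `logreturn` and $k$ is the lag order (6 in the output above). The reported Dickey-Fuller statistic is the t-ratio of $\hat{\gamma}$; a strongly negative value such as $-5.656$ is evidence against the unit-root null.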
