> # Assignment 1: Create 3 vectors x, y, z of equal length with random values, then bind them with T <- cbind(x,y,z)

> # Create a 3-dimensional plot of the same

> sample<-rnorm(50,25,6)

> sample

[1] 23.10381 25.85777 22.04959 43.53180 12.11174 37.23922 38.92648 22.77181 17.80844 30.41365 32.32586 37.09651 24.55097 19.85470 31.01534 29.70007 31.72610 22.26199

[19] 19.85826 36.94503 23.50247 18.00116 24.50004 27.57822 20.34054 17.32243 30.26892 19.03535 16.14514 28.81016 29.45099 23.10639 25.49178 35.95906 19.35419 23.04064

[37] 25.20819 18.83031 30.75433 19.14759 28.11077 25.91251 28.03618 33.34057 30.19792 25.07813 25.08856 26.12123 24.15002 22.09888

> x<-sample(sample,10)

> y<-sample(sample,10)

> z<-sample(sample,10)

> x

[1] 22.77181 31.01534 27.57822 22.09888 24.15002 19.85826 28.03618 17.32243 31.72610 19.35419

> y

[1] 19.85826 26.12123 30.19792 23.10639 31.72610 22.04959 24.55097 27.57822 28.03618 31.01534

> z

[1] 28.11077 31.72610 28.81016 12.11174 30.41365 23.50247 24.55097 22.04959 29.45099 30.26892

> T<-cbind(x,y,z)

> T

x y z

[1,] 22.77181 19.85826 28.11077

[2,] 31.01534 26.12123 31.72610

[3,] 27.57822 30.19792 28.81016

[4,] 22.09888 23.10639 12.11174

[5,] 24.15002 31.72610 30.41365

[6,] 19.85826 22.04959 23.50247

[7,] 28.03618 24.55097 24.55097

[8,] 17.32243 27.57822 22.04959

[9,] 31.72610 28.03618 29.45099

[10,] 19.35419 31.01534 30.26892

> library(rgl)  # plot3d() comes from the rgl package

> plot3d(T)

> plot3d(T,col=rainbow(1000))

> plot3d(T,col=rainbow(1000),type='s')

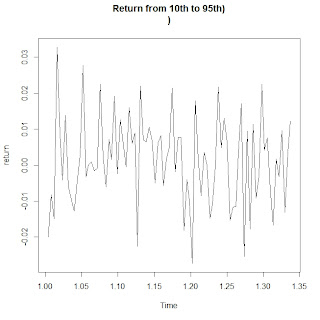
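
The transcript above reuses the names `sample` and `T`, which shadow R's `sample()` function and the `T` shorthand for `TRUE`, and its values change on every run because no seed is set. A minimal reproducible sketch of the same steps follows; the names `pool` and `m` and the seed value are illustrative assumptions, not part of the original assignment, and the plot is only drawn in an interactive session with rgl installed.

```r
# Reproducible sketch of Assignment 1 (`pool`, `m`, and the seed are illustrative).
set.seed(42)                           # fix the RNG so the draws repeat

pool <- rnorm(50, mean = 25, sd = 6)   # 50 values from a normal with mean 25, sd 6
x <- sample(pool, 10)                  # three draws of 10 values each,
y <- sample(pool, 10)                  # without replacement within a draw
z <- sample(pool, 10)

# The assignment requires equal lengths; fail early if that ever breaks.
stopifnot(length(x) == length(y), length(y) == length(z))

m <- cbind(x, y, z)                    # 10 x 3 numeric matrix with columns x, y, z

# Draw the 3D scatter only when a display is available and rgl is installed.
if (interactive() && requireNamespace("rgl", quietly = TRUE)) {
  rgl::plot3d(m, col = rainbow(nrow(m)), type = "s")  # one colour per point
}
```

Using `rainbow(nrow(m))` gives each of the 10 points its own hue, whereas `rainbow(1000)` would only use the first 10 of 1000 closely spaced colours.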

> # Assignment 2: Create 2 random variables and 3 plots:

> # 1. X-Y, X-Y|Z (introduce a variable z with 5 different categories and cbind it to x and y)

> x<-rnorm(1500,100,10)

> y<-rnorm(1500,85,5)

> z1<-sample(letters,5)

> z2<-sample(z1,1500,replace=TRUE)

> z<-as.factor(z2)

> t<-cbind(x,y,z)  # note: cbind() stores the factor z as its integer codes

> library(ggplot2)  # qplot() comes from the ggplot2 package

> qplot(x,y)

> qplot(x,z)

> qplot(x,z,alpha=I(1/10))

> qplot(x,y,geom=c("point","smooth"))

> qplot(x,y,colour=z)

> qplot(log(x),log(y),colour=z)
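
One way to read "X-Y|Z" is as the X-Y scatter drawn separately for each category of z. The sketch below illustrates that reading with `facet_wrap()`, and also shows why a data frame may be a better container than `cbind()` here: `cbind()` replaces a factor by its integer codes, while `data.frame()` keeps the labels. The names `mat` and `df`, the seed, and the choice of faceting are assumptions for illustration, not the assignment's stated method.

```r
# Sketch of Assignment 2 (`mat`, `df`, and the seed are illustrative).
set.seed(1)
x <- rnorm(1500, mean = 100, sd = 10)
y <- rnorm(1500, mean = 85, sd = 5)
z <- factor(sample(sample(letters, 5), 1500, replace = TRUE))  # 5 categories

mat <- cbind(x, y, z)        # numeric matrix: z is stored as integer codes
df  <- data.frame(x, y, z)   # data frame: z stays a factor with letter labels

# Draw the plots only in an interactive session with ggplot2 installed.
if (interactive() && requireNamespace("ggplot2", quietly = TRUE)) {
  library(ggplot2)
  p <- ggplot(df, aes(x, y))
  print(p + geom_point())                               # X-Y
  print(p + geom_point(alpha = 0.3) + facet_wrap(~ z))  # X-Y | Z, one panel per category
  print(p + geom_point(aes(colour = z)))                # X-Y coloured by Z
}
```

Because x and y are generated independently of z, the panels should look alike; the faceting only becomes informative when the categories actually differ.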
